Tutorial: Build your first neural network from scratch

Published:

In this episode, we want to investigate a simple working neural network (NN) anatomically, without the use of any deep learning package. You might find it very interesting to design every single element that is essential to perform forward and backward propagation. Every piece of component can be put up together like playing a LEGO game!

Getting started

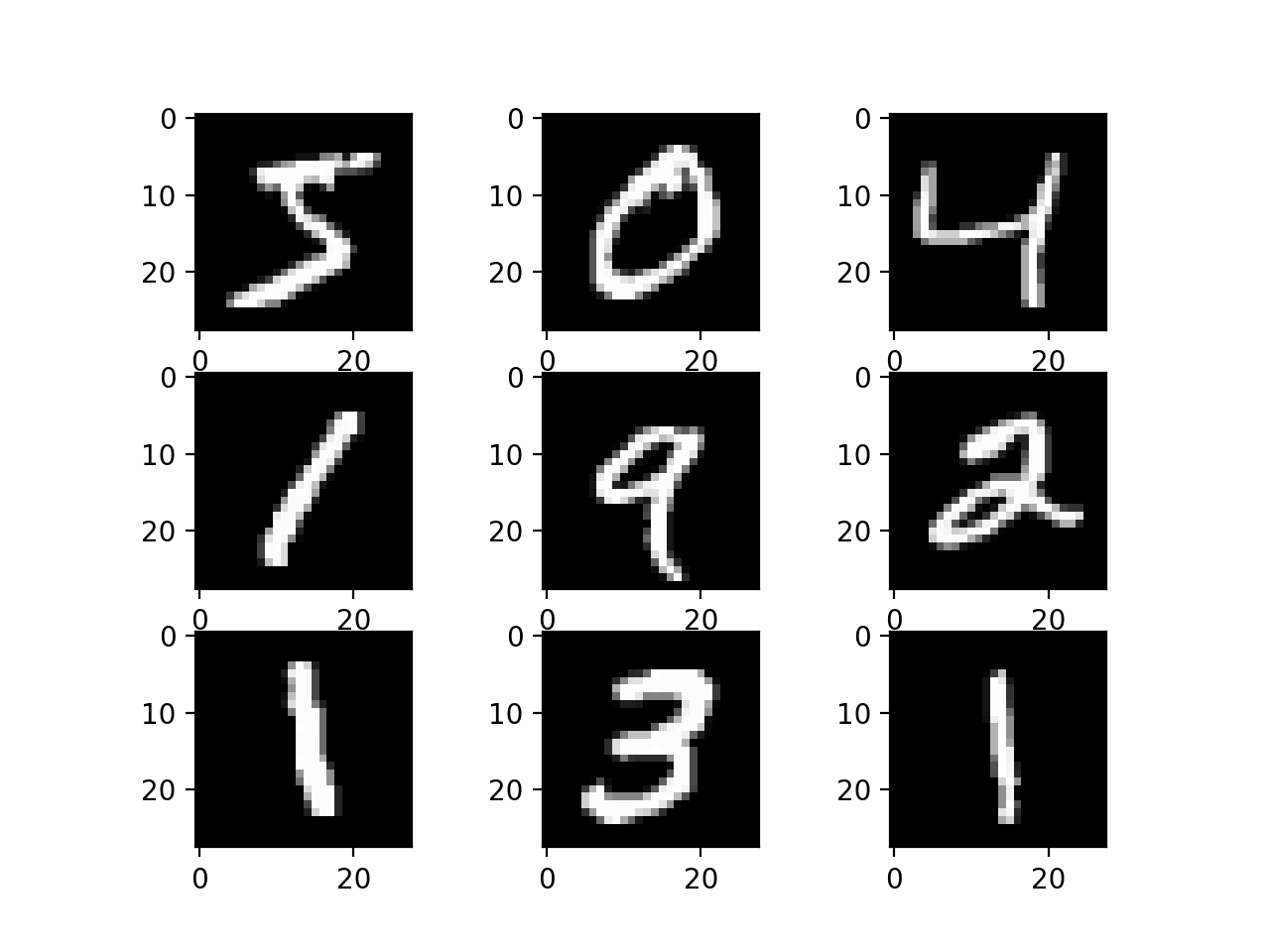

We are going to implement a $L$ layer neural network (multiple layer perceptron) that can perform simple tasks such as digit recognization. $L$ is an arbitrary number as you require, within which we want $L-1$ weight matrices $\boldsymbol{w}^{(i)}$ that link the $i$-th and the $i+1$-th layer, and the last one is the output layer (e.g. a Softmax). We define a data matrix $\boldsymbol{X}$ ($N$ by $M$) that contains $N$ pieces of data with dimension $M$. In the MNIST dataset, each image is 28 by 28 pixels, so we have a dimension of 784. We also have a target $\boldsymbol{Y}$ ($N$ by $K$), a matrix of $K$-category one-hot label. We will do this step by step via specification of every component and giving an implementation in MATLAB. Before proceeding, it is recommended to have a look at the lecture note.

In this tutorial, our MLP is constructed in the form \begin{equation} \hat{\boldsymbol{Y}}=\operatorname{Softmax}(\boldsymbol{w}^{(L-1)} \sigma(\boldsymbol{w}^{(L-2)}\cdots\sigma(\boldsymbol{w}^{(2)}\sigma(\boldsymbol{X}\boldsymbol{w}^{(1)}+\boldsymbol{b}^{(1)})+\boldsymbol{b}^{(2)})\cdots+\boldsymbol{b}^{(L-2)})). \end{equation} Since we are dealing with data matrices rather than vectors, to make more efficient computation we try to enable every MATLAB function to do element-wise computation with matrices or tensors. Recall from your data science lectures, these are some important functions that compose our neural network.

- Sigmoid \(\begin{equation} \sigma(t)=\frac{1}{1+\exp (-t)} \end{equation}\)

function g = sigmoid(T) g = 1 ./ (1+exp(-T)); end - Derivative of Sigmoid \(\begin{align}\frac{d \sigma(t)}{d t}=\sigma(t)(1-\sigma(t))\end{align}\)

function g = dsigmoid(T) g = zeros(size(T)); g = sigmoid(t).*(1-sigmoid(T)); end - Softmax \(\begin{equation} \operatorname{Softmax}\left(\boldsymbol{t}\right)=\left(\frac{\exp \left(t_1\right)}{\sum_{k=1}^K \exp \left(t_k\right)}, \cdots, \frac{\exp \left(t_K\right)}{\sum_{k=1}^K \exp \left(t_k\right)}\right) \end{equation}\)

function y = softmax(T) y = exp(T) ./ sum(exp(T),2); %denominator sum by row end - Cross Entropy (This will be our loss function) \(\begin{equation} \mathcal{L}(\boldsymbol{Y},\hat{\boldsymbol{Y}})=\frac{1}{N} \sum_i^N \sum_j^K -y_{i k} \log \left(\hat{y}_{i k}\right) \end{equation}\)

function C = crossentropy(Y,Y_hat) C = sum(sum(-Y.* log(Y_hat))) / size(Y, 1) ;

Initialise Weights

The initialization only needs to specify the size of layers (number of neurons in each layer), say, layers = [input_size,hidden_size ,..., hidden_size , output_size]. Note that, we need to reserve one more dimension in every weight matrix for the bias coefficients. All initial weight entries can be sampled from a Gaussian distribution, say, $\mathcal{N}(0,0.1)$.

function ini_weights = randWeights(layers)

for i = 1:(size(layers, 2)-1)

epsilon = 0.1;

ini_weights{i} = rand(layers(i+1), 1+layers(i))*2*epsilon - epsilon;

end

end

Forward Propagation

Here are some definitions of intermediate variables.

$\boldsymbol{z}^{(i)}=\boldsymbol{a}^{(i-1)}\boldsymbol{w}^{(i-1)}$, linear production at the $i$-th layer;

$\boldsymbol{a}^{(i)}=\sigma(\boldsymbol{z}^{(i)})$, intermediate outcome at the $i$-th layer;

Firstly, specify the input layer $\boldsymbol{a}^{(1)}$. For convenience, we append one more column of all ones to all intermediate outcomes from every layer as the bias term $\boldsymbol{b}$. \(\begin{equation}\boldsymbol{a}^{(1)}=\left[\begin{array}{cc} 1&\boldsymbol{X}_1\\ \cdots&\cdots\\ 1&\boldsymbol{X}_N \end{array}\right]=\left[\begin{array}{cc} \boldsymbol{1}&\boldsymbol{X}\\ \end{array}\right], \end{equation}\) where $\boldsymbol{1}$ represent a column vector of ones. Now we generate a sequence of hidden layers from $(\boldsymbol{z}^{(2)},\boldsymbol{a}^{(2)})$ to $(\boldsymbol{z}^{(L-1)},\boldsymbol{a}^{(l-1)}).$ \(\begin{equation} \begin{aligned} \boldsymbol{z}^{(l)}&=\boldsymbol{a}^{(l-1)}\cdot\boldsymbol{w}^{(l-1)^\top}\\ \boldsymbol{a}^{(l)}&=\left[\begin{array}{cc} \boldsymbol{1}&\sigma(\boldsymbol{z}^{(l)})\\ \end{array}\right] \end{aligned} \end{equation}\) Compute the output layer $\boldsymbol{z}^L$ and $\boldsymbol{a}^L$, \(\begin{equation} \begin{aligned} \boldsymbol{z}^{(L)}&=\boldsymbol{a}^{(L-1)}\cdot\boldsymbol{w}^{(L-1)^\top}\\ \boldsymbol{a}^{(L)}&=\operatorname{Softmax}(\boldsymbol{z}^{(L)}) \end{aligned} \end{equation}\) Compute the total lost, \(\begin{equation} \mathcal{L}\left(\boldsymbol{Y},\boldsymbol{a}^{(L)}\right). \end{equation}\)

function [C,a,z] = forward(X,Y,layers,weights)

a1 = [ones(size(X,1), 1) X]; %design input

C = 0; %initialise cost

for i = 1:(size(layers,2)) %initialize the sum and the activations

z{i} = zeros(size(X,1),layers(i)+1);

a{i} = zeros(size(X,1),layers(i)+1);

end

a{1} = a1; %input layer

for i = 2:size(layers,2) %hidden layers

z{i} = a{i-1}*weights{i-1}';

a{i} = z{i};

if i ~= size(layers,2) %(eq 1.6)

a{i} = sigmoid(z{i});

a{i} = [ones(length(z{i}),1) a{i}]; %append bias for next layer

else

a{i} = softmax(a{i}); %output layer (eq 1.7)

end

end

C = crossentropy(Y,a{end}); %compute cost eq.(eq 1.8)

end

Backward Propagation

First, look at the gradient of cross-entropy w.r.t the weights from the last layer, \(\begin{equation} \begin{aligned} \frac{\partial}{\partial\boldsymbol{w}} \mathcal{L}(\boldsymbol{w}) &=\frac{\partial}{\partial\boldsymbol{w}} \left(\frac{1}{N}\sum_{i=1}^N \sum_{k=1}^K -y_{i, k}\left[\log \left(\frac{\exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_k\right)}{\sum_{l=1}^K \exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_l\right)}\right)\right]\right) \\ &=\frac{1}{N}\left[\begin{array}{ccc} \frac{\exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_1\right)}{\sum_{l=1}^K \exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_l\right)} & \cdots & \frac{\exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_K\right)}{\sum_{l=1}^K \exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_l\right)} \end{array}\right]-\frac{1}{N}\boldsymbol{y}_i \\ & \equiv \frac{1}{N} \left[\frac{\exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}\right)}{\sum_{l=1}^K \exp \left(\mathbf{a}_i \boldsymbol{w}^{\top}_l\right)}-\boldsymbol{y}_i\right]\\ & \equiv \frac{1}{N}\left(\boldsymbol{a}^{(L)}-\boldsymbol{Y}\right). \end{aligned} \end{equation}\) This is the difference between the predicted outcome and the true label. Next, we consider the gradient w.r.t the weights from the hidden layers. Compute $\boldsymbol{\delta}^{(L)}$ first, \(\begin{equation} \boldsymbol{\delta}^{(L)}=\frac{\partial}{\partial\boldsymbol{w}^{(L-1)}} \mathcal{L}(\boldsymbol{w}^{(L-1)})=\frac{1}{N}\left(\boldsymbol{a}^{(L)}-\boldsymbol{y}\right). \end{equation}\) Why and how do we calculating this $\boldsymbol{\delta}$ ? You can find the answer in the chain rule. Generate the whole sequence of deltas from $\boldsymbol{\delta}^{(L)}$ to $\boldsymbol{\delta}^{(1)}$. Note that the order is reversed compared with forward propagation. \(\begin{equation} \boldsymbol{\delta}^{(l)}=\left(\boldsymbol{\delta}^{(l+1)}\cdot\boldsymbol{w}^{(l)^{\top}} \right) \odot \sigma^{\prime}\left(\boldsymbol{z}^{(l)}\right) \end{equation}\) Then compute gradients at each layer, \(\begin{equation} \frac{\partial \mathcal{L}}{\partial \boldsymbol{w}^{(l)}}=\boldsymbol{\delta}^{(l+1)^{\top}} \mathbf{a}^{(l)} \end{equation}\)

function [gradient] = backward(a,z,layers,Y,weights)

for i = size(layers,2):-1:2 %in backward direction

if i == size(layers,2)

delta{i} = (a{i} - Y)./size(Y,1); %first gradient of cross entropy (eq 1.10)

else

%passing delta iteratively (eq 1.11)

delta{i} = (delta{i+1}*weights{i}(:, 2:end)) .* sigmoidGradient(z{i});

end

gradient{i-1} = delta{i}'*a{i-1}; %compute gradient in terms of weights (eq 1.12)

end

end

Gradient Descent

This is presented as one step of training, which is composed of one step of forward & backward propagation and one step of updating weights using gradient descent, \(\begin{equation} \boldsymbol{w}_{t+1}=\boldsymbol{w}_t- \alpha\nabla_{\boldsymbol{w}} \mathcal{L}\left(\boldsymbol{w}_t\right), \end{equation}\) where $\alpha$ is a constant learning rate. To perform one step of gradient descent, we need to gather all the gradients we’ve just computed from the back propagation. Remember, this is the full weight tensor \(\begin{equation} \boldsymbol{w}=\left[\begin{array}{llll} [\boldsymbol{w}^{(1)}] & [\boldsymbol{w}^{(2)}] & \cdots &[\boldsymbol{w}^{(L-1)}] \end{array}\right] \end{equation}\)

function [C, newweights] = train(weights, layer_sizes,X, Y, alpha)

[C,a,z] = forward(X,Y,layer_sizes,weights); %forward propagetion

[weight_grad] = backward(a,z,layer_sizes,Y,weights); %backward propagation

for i = 1:(size(layer_sizes,2)-1) %update weights (eq 1.13)

newweights{i} = weights{i} - alpha*weight_grad{i};

end

end

Put Things Together

The only source we have is the MNIST data set from MATLAB, to save time we only extract 5000 pictures from the training data set and manually convert them into a data matrix with labels. Labels are subsequently converted into a matrix of one-hot vectors.

clear all;

oldpath = addpath(fullfile(matlabroot,'examples','nnet','main'));

filenameImagesTrain = 'train-images-idx3-ubyte.gz';

filenameLabelsTrain = 'train-labels-idx1-ubyte.gz';

XTrain = processImagesMNIST(filenameImagesTrain);

YTrain = processLabelsMNIST(filenameLabelsTrain);

data = []; %creat data matrix

for i = 1:5000

img = XTrain(:,:,1,i);

v = reshape(img,[28,28]);

vec = reshape(v,1,[]); %turn image into vector

data = [data;vec]; %put vectors together

end

X=double(extractdata(data)); % X [5000,28*28]

y = double(YTrain(1:5000))-1; %extract labels, turn categorical into double

Y = (0:9) == y; %convert into One Hot.

>> [size(X),size(Y)]

ans =

5000 784 5000 10

Training

Only two steps of training on MNIST is not enough, we try 1000 iterations and document the cost for every 100 iterations. To see if our MLP really works, we also compute the precision of the classification within the training set. In this sample MLP we use two hidden layers, each with 50 neurons. This is good enough for our task.

input_layer_size = size(X, 2);

num_labels = size(Y, 2);

layers = [input_layer_size,50,50,num_labels]; %vector of layer sizes

alpha = 1; %learning rate

num_iteration = 1000; %number of iterations

tic;

weights = randWeights(layers); %initialize weights and bias

for i = 1:num_iteration

[C, weights] = train(weights, layers,... %training loop

X, Y, alpha);

if mod(i,100) == 0 %report every 100 iterations

[~,a,~] = forward(X,Y,layers,weights); %make prediction

[~, p] = max(a{end}, [], 2); %argmax of One Hot

pred = p-1;

precision = mean(double(pred == y)) * 100; %calculate precision

disp(['Iteration: ' num2str(i) ' / ' num2str(num_iteration) ' ; Cost: ' num2str(C) ' ; Precission: ' num2str(precision)]);

end

end

time = toc;

disp(['Time spent: ' num2str(time) ' seconds' ])

>> neural_network

Iteration: 100 / 1000 ; Cost: 1.5695 ; Precission: 50.9

Iteration: 200 / 1000 ; Cost: 0.63653 ; Precission: 82.54

Iteration: 300 / 1000 ; Cost: 0.42388 ; Precission: 88.88

Iteration: 400 / 1000 ; Cost: 0.32171 ; Precission: 91.58

Iteration: 500 / 1000 ; Cost: 0.25099 ; Precission: 93.06

Iteration: 600 / 1000 ; Cost: 0.20056 ; Precission: 94.8

Iteration: 700 / 1000 ; Cost: 0.16264 ; Precission: 95.94

Iteration: 800 / 1000 ; Cost: 0.1328 ; Precission: 96.92

Iteration: 900 / 1000 ; Cost: 0.1088 ; Precission: 97.76

Iteration: 1000 / 1000 ; Cost: 0.089292 ; Precission: 98.34

Time spent: 50.6449 seconds

This result definitely makes sense, our neural network is able to learn something from MNIST. So far, we have build this arbitrary layer neural network with a total of merely 86 lines of MATLAB code (excluding importing data set) without any machine learning package.

Let’s print more results!

i = randi(length(y)); %take one sample

[~,a,~] = forward(X,Y,layers,weights);

[~, p] = max(a{end}, [], 2);

pred=p(i)-1;

figure;

imshow(reshape(X(i,:),28,28));

fprintf('True: %d ; Predicted: %d\n',y(i),pred);

>> True class: 3 ; Predicted class: 3

Exercise: Build NN with convolution layers

A convolution layer

](/images/conv.gif)

To do this you just follow the same steps previously

- Specify input/output dimensions and model parameters such as filters, kernel size, strides, padding, etc.

- Derive forward & backward propagations using product rule and chain rule mathematically.

- Make your maths work in simulation.

- Try some automatic differentiation software (TensorFlow, PyTorch… )